2021 Federal Standard of Excellence

U.S. Department of Education

9

Leadership

Did the agency have senior staff members with the authority, staff, and budget to build and use evidence to inform the agency’s major policy and program decisions in FY21?

1.1 Did the agency have a senior leader with the budget and staff to serve as the agency’s Evaluation Officer (or equivalent)? (Example: Evidence Act 313)

- The Commissioner for the National Center for Education Evaluation and Regional Assistance (NCEE) serves as the Department of Education (ED) evaluation officer. ED’s Institute of Education Sciences (IES), with a budget of $642 million in FY21, is primarily responsible for education research, evaluation, and statistics. The NCEE Commissioner is responsible for planning and overseeing ED’s major evaluations and also supports the IES Director. IES employed approximately 150 full-time staff in FY21, including approximately 25 staff in NCEE.

1.2 Did the agency have a senior leader with the budget and staff to serve as the agency’s Chief Data Officer (or equivalent)? (Example: Evidence Act 202(e))

- ED has a designated Chief Data Officer (CDO). The Office of Planning, Evaluation and Policy Development’s (OPEPD) Office of the Chief Data Officer (OCDO) has grown from a staff of 12 two years ago to a staff of 33 FTE and detailees today. The Evidence Act provides a framework for OCDO’s responsibilities, which include lifecycle data management and developing and enforcing data governance policies. The OCDO has oversight over ED’s information collections approval and associated OMB clearance process. It is responsible for developing and enforcing ED’s open data plan, including management of a centralized comprehensive data inventory accounting for all data assets across ED. The OCDO is also responsible for developing and maintaining a technological and analytical infrastructure that is responsive to ED’s strategic data needs, exploiting traditional and emerging analytical methods to improve decision making, optimize outcomes, and create efficiencies. These activities are carried out by the Governance and Strategy Division, which focuses on data governance, lifecycle data management, and open data; and the Analytics and Support Division, which provides data analytics and infrastructure responsive to ED’s strategic data needs. The current OCDO budget reflects the importance of these activities to ED leadership, with S&E funding allocated for data governance, data analytics, open data, and information clearances.

1.3 Did the agency have a governance structure to coordinate the activities of its evaluation officer, chief data officer, statistical officer, performance improvement officer, and other related officials in order to support, improve, and evaluate the agency’s major programs?

- The EO, CDO, and SO meet monthly for the purposes of ensuring ongoing coordination of Evidence Act work. Each leader, or their designee, also participates in the PIO’s Strategic Planning and Review process. In FY21, the CDO is the owner of Goal 3 in ED’s strategic plan: “Strengthen the quality, accessibility, and use of education data through better management, increased privacy protections and transparency.” Leaders of the three embedded objectives come from OCDO, OCIO, and NCES.

- The Evidence Leadership Group (ELG) supports program staff that run evidence-based grant competitions and monitor evidence-based grant projects. It advises ED leadership and staff on how evidence can be used to improve ED programs and provides support to staff in the use of evidence. It is co-chaired by the Evaluation Officer and the OPEPD Director of Grants Policy. Both co-chairs sit on ED’s Policy Committee (described below). The SO, EO, CDO, and Performance Improvement Officer (PIO) are ex officio members of the ELG.

- The ED Data Governance Board (DGB) sponsors agency-wide actions to develop an open data culture and works to improve ED’s capacity to leverage data as a strategic asset for evidence building and operational decisions, including developing the capacity of data professionals in program offices. It is chaired by the CDO, with the SO, EO, and PIO as ex officio members.

- The ED CDO sits in OPEPD and the Evaluation Officer (EO) and the Statistical Official (SO) sit in the Institute of Education Sciences (IES). Both OPEPD and IES participate in monthly Policy Committee meetings, which often address evidence-related topics. OPEPD advances the Secretary’s policy priorities, including evidence, while IES is focused on (a) bringing extant evidence to policy conversations and (b) suggesting how evidence can be built as part of policy initiatives. OPEPD plays leading roles in the formation of ED’s policy positions as expressed through annual budget requests and grant competition priorities, including evidence. Both OPEPD and IES provide technical assistance to Congress to ensure evidence appropriately informs policy design.

9

Evaluation & Research

Did the agency have an evaluation policy, evaluation plan, and learning agenda (evidence-building plan), and did it publicly release the findings of all completed program evaluations in FY21?

2.1 Did the agency have an agency-wide evaluation policy? (Example: Evidence Act 313(d))

- The Department’s Evaluation Policy is posted online at ed.gov/data and can be directly accessed here. Key features of the policy include the Department’s commitment to: (1) independence and objectivity; (2) relevance and utility; (3) rigor and quality; (4) transparency; and (5) ethics. Special features include additional guidance to ED staff on considerations for evidence-building conducted by ED program participants, which emphasize the need for grantees to build evidence in a manner consistent with the parameters of their grants (e.g., purpose, scope, and funding levels), up to and including rigorous evaluations that meet WWC standards without reservations.

2.2 Did the agency have an agency-wide evaluation plan? (Example: Evidence Act 312(b))

- The Department’s FY22 Annual Evaluation Plan is posted at https://www.ed.gov/data under “FY22 Evidence-Building Deliverables.” The FY23 Plan will be posted there in February 2022, concurrent with the release of the President’s Budget.

2.3 Did the agency have a learning agenda (evidence-building plan) and did the learning agenda describe the agency’s process for engaging stakeholders including, but not limited to the general public, state and local governments, and researchers/academics in the development of that agenda? (Example: Evidence Act 312)

- The Department submitted its FY22-FY26 Learning Agenda in concert with its FY22-FY26 Strategic Plan to OMB in September 2021. The previous version is available here. In August 2021, ED published a Federal Register notice seeking comment on key topics within the Learning Agenda and will continue to seek stakeholder feedback on the document. ED will publish its Learning Agenda in February 2022 as part of the Agency’s Strategic Plan.

2.4 Did the agency publicly release all completed program evaluations?

- IES publicly releases all peer-reviewed publications from its evaluations on the IES website and also in the Education Resources Information Center (ERIC). Many IES evaluations are also reviewed by its What Works Clearinghouse. IES also maintains profiles of all evaluations on its website, both completed and ongoing, which include key findings, publications, and products. IES regularly conducts briefings on its evaluations for ED, the Office of Management and Budget, Congressional staff, and the public.

2.5 What is the coverage, quality, methods, effectiveness, and independence of the agency’s evaluation, research, and analysis efforts? (Example: Evidence Act 3115, subchapter II (c)(3)(9))

- The Department submitted its FY22-FY26 Capacity Assessment in concert with its FY22-FY26 Strategic Plan to OMB in September 2021. ED will publish its FY22-FY26 Capacity Assessment in February 2022 as part of the Agency’s Strategic Plan.

2.6 Did the agency use rigorous evaluation methods, including random assignment studies, for research and evaluation purposes?

- The IES website includes a searchable database of planned and completed evaluations, including those that use experimental, quasi-experimental, or regression discontinuity designs. All impact evaluations rely upon experimental trials. Other methods, including matching and regression discontinuity designs, are classified as rigorous outcomes evaluations. IES also publishes studies that are descriptive or correlational in nature, including implementation studies and less rigorous outcomes evaluations.

5

Resources

Did the agency invest at least 1% of program funds in evaluations in FY21?

3.1 ____ (Name of agency) invested $____ on evaluations, evaluation technical assistance, and evaluation capacity-building, representing __% of the agency’s $___ billion FY21 budget.

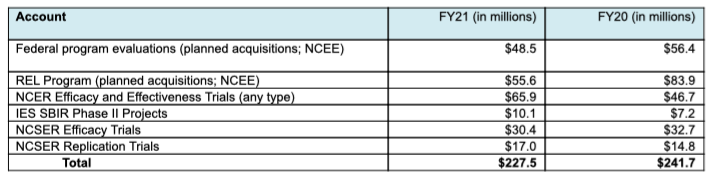

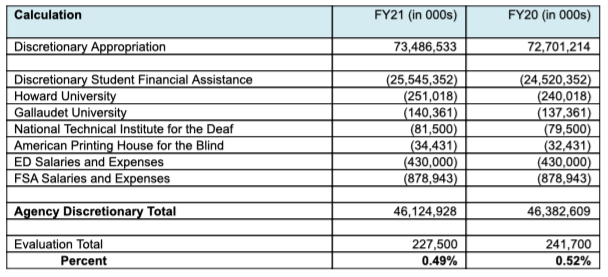

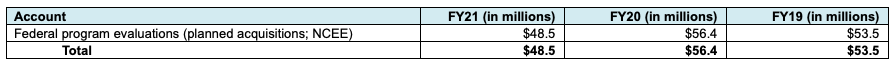

- ED invested $228 million in high-quality evaluations of federal programs, evaluations as part of field-initiated research and development, technical assistance related to evaluation and evidence-building, and capacity-building in FY21. This includes work awarded by the Regional Educational Laboratories ($55.6 million), NCEE’s Evaluation Division ($48.5 million), NCER Efficacy and Effectiveness Trials ($65.9 million), SBIR Phase II Projects ($10.1 million), NCSER Efficacy Trials ($30.4 million), and NCSER Replication Trials ($17 million).
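The component amounts listed above can be cross-checked against the $228 million headline figure. The following is a minimal sketch in Python, not part of ED’s own reporting; the dictionary labels are shorthand introduced here for illustration, and the amounts are copied from the paragraph above.

```python
# Component amounts in millions of dollars, as listed in the paragraph above.
# The labels are shorthand chosen for this sketch.
components = {
    "Regional Educational Laboratories": 55.6,
    "NCEE Evaluation Division": 48.5,
    "NCER Efficacy and Effectiveness Trials": 65.9,
    "SBIR Phase II Projects": 10.1,
    "NCSER Efficacy Trials": 30.4,
    "NCSER Replication Trials": 17.0,
}

HEADLINE_TOTAL = 228.0  # the reported FY21 figure, in millions

# Round to one decimal place to avoid floating-point noise in the sum.
total = round(sum(components.values()), 1)
difference = round(HEADLINE_TOTAL - total, 1)

print(f"Sum of listed components: ${total} million")
print(f"Difference from headline figure: ${difference} million")
```

The listed components sum to $227.5 million, so the headline figure appears to be that sum rounded to the nearest million.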

3.2 Did the agency have a budget for evaluation and how much was it? (Were there any changes in this budget from the previous fiscal year?)

- ED does not have a specific budget solely for federal program evaluation. Evaluations are supported either by required or allowable program funds or by ESEA Section 8601, which permits the Secretary to reserve up to 0.5% of selected ESEA program funds for rigorous evaluation. The decrease in funds dedicated to federal program evaluations represents a combination of natural variation in resource needs across the lifecycle of individual evaluations and the need to slow some activities in response to school closures associated with the COVID-19 pandemic.

3.3 Did the agency provide financial and other resources to help city, county, and state governments or other grantees build their evaluation capacity (including technical assistance funds for data and evidence capacity building)?

- In June 2021, IES announced its most recent state-focused grant program, ALN 84.305S: Using Longitudinal Data to Support State Education Recovery Policymaking. As part of this program, IES supports state agencies’ use of their state’s education longitudinal data systems as they and local education agencies reengage their students after the disruptions caused by COVID-19. State agencies can apply for these grants, on their own or in collaboration with other organizations, to support research to inform their decisions regarding issues, programs, and policies related to learning acceleration and recovery. The maximum award is $1 million over three years. To date, the Statewide Longitudinal Data Systems (SLDS) grant program has awarded more than $721 million to 51 states and territories.

- The Regional Educational Laboratories (RELs) provide extensive technical assistance on evaluation and support research partnerships that conduct implementation and impact studies on education policies and programs in ten geographic regions of the U.S., covering all states, territories, and the District of Columbia.

- Comprehensive Centers provide support to states in planning and implementing interventions through coaching, peer-to-peer learning opportunities, and ongoing direct support. The State Implementation and Scaling Up of Evidence-Based Practices Center provides tools, training modules, and resources on implementation planning and monitoring.

8

Performance Management / Continuous Improvement

Did the agency implement a performance management system with outcome-focused goals and aligned program objectives and measures, and did it frequently collect, analyze, and use data and evidence to improve outcomes, return on investment, and other dimensions of performance in FY21?

4.1 Did the agency have a strategic plan with outcome goals, program objectives (if different), outcome measures, and program measures (if different)?

- ED’s current FY18-22 Strategic Plan includes two parallel goals, one for P-12 and one for higher education (Strategic Objectives 1.4 and 2.2, respectively), that focus on supporting agencies and educational institutions in the identification and use of evidence-based strategies and practices. The OPEPD ELG co-chair is responsible for both strategic objectives. An FY22-26 Strategic Plan is under development and will be released in February 2022.

- All Department Annual Performance Reports (most recent fiscal year) and Annual Performance Plans (upcoming fiscal year) are located on ED’s website.

4.2 Did the agency use data/evidence to improve outcomes and return on investment?

- The Grants Policy Office in the Office of Planning, Evaluation and Policy Development (OPEPD) works with offices across ED to ensure alignment with the Secretary’s priorities, including evidence-based practices. The Grants Policy Office looks at where ED and the field can continuously improve by building stronger evidence, making decisions based on a clear understanding of the available evidence, and disseminating evidence to decision-makers. Specific activities include: strengthening the connection between the Secretary’s policies and grant implementation from design through evaluation; supporting a culture of evidence-based practices; providing guidance to grant-making offices on how to integrate evidence into program design; and identifying opportunities where ED and the field can improve by building, understanding, and using evidence. The Grants Policy Office collaborates with offices across the Department on a variety of activities, including reviews of efforts used to determine grantee performance.

- ED is focused on efforts to disaggregate outcomes by race and other demographics and to communicate those results to internal and external stakeholders. For example, in Q1 of FY21, OCDO launched the Education Stabilization Fund (ESF) Transparency Portal at covid-relief-data.ed.gov, allowing ED to track performance, hold grantees accountable, and provide transparency to taxpayers and oversight bodies. The portal was updated in June 2021 to include Annual Performance Report (APR) data from CARES Act grantees, allowing ED and the public to monitor support for students and teachers and track the grantees’ progress. The portal content displays key data from the APRs, summarizing how the CARES Act funds were used by states and districts from March through September 2020, and by institutions of higher education from March through December 2020. The APR forms for the next data collection in FY22 give ESF grantees the opportunity to further disaggregate the data collected on the ESF funds. For example, the Elementary and Secondary School Emergency Relief (ESSER) form asks for counts of students who participated in various activities to support learning recovery or acceleration for subpopulations disproportionately impacted by the COVID-19 pandemic. Categories include students with one or more disabilities, low-income students, English language learners, students in foster care, migratory students, students experiencing homelessness, and five race/ethnicity categories.

4.3 Did the agency have a continuous improvement or learning cycle processes to identify promising practices, problem areas, possible causal factors, and opportunities for improvement? (Examples: stat meetings, data analytics, data visualization tools, or other tools that improve performance)

- As part of its performance improvement efforts, senior career and political leadership convene in ongoing Quarterly Performance Review (QPR) meetings. As part of the QPR process, the Performance Improvement Officer leads senior career and political officials in a review of ED’s progress towards its two-year Agency Priority Goals and four-year Strategic Goals. In each QPR, assembled leadership reviews metrics that are “below target,” brainstorms potential solutions, and celebrates progress toward achieving goals that are “on track” for the current fiscal year.

- The Department conducted after-action reviews after the FY19 and FY20 competition cycles to reflect on successes of the year as well as opportunities for improvement. The reviews resulted in process updates for FY21. In addition, the Department updated an optional internal tool to inform policy deliberations and progress on the Secretary’s policy priorities, including the use of evidence and data.

8

Data

Did the agency collect, analyze, share, and use high-quality administrative and survey data – consistent with strong privacy protections – to improve (or help other entities improve) outcomes, cost-effectiveness, and/or the performance of federal, state, local, and other service providers programs in FY21?

5.1 Did the agency have a strategic data plan, including an open data policy? (Example: Evidence Act 202(c), Strategic Information Resources Plan)

- The ED Data Strategy–the first of its kind for the U.S. Department of Education–was released in December of 2020. It recognized that we can, should, and will do more to improve student outcomes through more strategic use of data. The ED Data Strategy goals are highly interdependent with cross-cutting objectives requiring a highly collaborative effort across ED’s principal offices. The Strategy calls for strengthening data governance to administer the data it uses for operations, answer important questions, and meet legal requirements. To accelerate evidence-building and enhance operational performance, it requires that ED make its data more interoperable and accessible for tasks ranging from routine reporting to advanced analytics. The high volume and evolving nature of ED’s data tasks necessitate a focus on developing a workforce with skills commensurate with a modern data culture in a digital age. At the same time, safely and securely providing access for researchers and policymakers helps foster innovation and evidence-based decision making at the federal, state, and local levels.

- Goal 4 of the Data Strategy calls for ED to “Improve Data Access, Transparency, and Privacy.” Objective 4.1 under this goal is to “Develop and implement an Open Data Plan that describes the Department’s efforts to make its data open to the public.” Improving access to ED data, while maintaining quality and confidentiality, is key to expanding the agency’s ability to generate evidence to inform policy and program decisions. Increasing access to data for ED staff, federal, state, and local lawmakers, and researchers can help ED make new connections and foster evidence-based decision making. Increasing access can also spur innovations that support ED’s stakeholders, provide transparency about ED’s activities, and serve the public good. ED seeks to improve user access by ensuring that open data assets are in a machine-readable, open format and accessible via its comprehensive data inventory. ED will better leverage expertise in the field to expand its base of evidence by establishing a process for researchers to access non-public data. Further, ED will develop a cohesive and consistent approach to privacy and enhance information collection processes to ensure that Department data are findable, accessible, interoperable, and reusable.

- ED continues to wait for Phase 2 guidance from OMB to understand required parameters for the open data plan. In the meantime, ED continues to release open data; the Department soft-launched the Open Data Platform in September 2020 and publicly released it in December 2020.

- ED launched a public transparency portal in November 2020 disclosing expenditures and grantee performance data for the Education Stabilization Fund authorized under the CARES Act and subsequent authorities.

- ED continues to draft its open data plan. The final plan will conform to the new requirements once OMB Phase 2 guidance is released. If guidance is received soon, ED will publish its open data plan in FY22 within the agency’s Information Resource Management Strategic Plan.

- ED’s FY18-22 Performance Plan outlines strategic goals and objectives, including Goal #3: “Strengthen the quality, accessibility and use of education data through better management, increased privacy protections and transparency.” This currently serves as a strategic plan for ED’s governance, protection, and use of data while it develops the Open Data Plan required by the Evidence Act. The plan includes a metric on the number of data assets that are “open by default” as well as a metric on open licensing requirements for deliverables created with Department grant funds.

5.2 Did the agency have an updated comprehensive data inventory? (Example: Evidence Act 3511)

- The Open Data Platform (ODP) at data.ed.gov is ED’s solution for publishing, finding, and accessing its public data profiles. This open data catalog brings together the Department’s data assets in a single location, making them available with their metadata, documentation, and APIs for use by the public. The ODP makes existing public data from all ED principal offices accessible to the public, researchers, and ED staff in one location. The ODP improves the Department’s ability to grow and operationalize its comprehensive data inventory while progressing on open data requirements. The Evidence Act requires government agencies to make data assets open and machine-readable by default. ODP is ED’s comprehensive data inventory satisfying these requirements while also providing privacy and security. ODP features standard metadata contained in Data Profiles for each data asset. Before new assets are added, data stewards conduct quality review checks on the metadata to ensure accuracy and consistency. As the platform matures and expands, ED staff and the public will find it a powerful tool for accessing and analyzing ED data, either through the platform directly or through other tools powered by its API. The 309 data profiles included in ODP, encompassing over 3,500 individual data sets, will add to the 619 entries the Department already has in the Federal Data Catalogue once ODP takes over the data inventory feed in Q1 of FY22.

- The ED Data Inventory (EDI) was developed in response to the requirements of M-13-13 and initially served ED’s external asset inventory. It describes data reported to ED as part of grant activities, along with administrative and statistical data assembled and maintained by ED. It includes descriptive information about each data collection along with information on the specific data elements in individual data collections.

- Information about Department data collected by the National Center for Education Statistics (NCES) has historically been made publicly available online. Prioritized data is further documented or featured on ED’s data page. NCES is also leading a government-wide effort to automatically populate metadata from Information Collection Request packages to data inventories. This may facilitate the process of populating the EDI and the comprehensive data inventory.

5.3 Did the agency promote data access or data linkage for evaluation, evidence-building, or program improvement? (Examples: Model data-sharing agreements or data-licensing agreements; data tagging and documentation; data standardization; downloadable machine-readable, de-identified tagged data; Evidence Act 3520(c))

- As ED collaboratively took stock of organizational data strengths and weaknesses, key themes arose and provided context for the development of the ED Data Strategy. The Strategy addresses new and emerging mandates such as open data by default, interagency data sharing, data standardization and other principles found in the Evidence Act and Federal Data Strategy. However, improving strategic data management has benefits far beyond compliance; solving persistent data challenges and making progress against a baseline data maturity assessment offers ED the opportunity to close capability gaps and enable staff to make evidence-based decisions.

- One of the first priorities for the ED Data Governance Board (DGB) in FY21 was to assess the current state of data maturity at ED. In early 2020, OCDO held “discovery” meetings with stakeholders from each ED office to capture information about successes and challenges in the current data landscape. This activity yielded over 300 data challenges and 200 data successes that provided a wealth of information to inform future data governance priorities. The DGB used the understanding gained of the ED data landscape during the discovery phase to develop a Data Maturity Assessment (DMA) for each office and the overall enterprise focusing on data and related data infrastructure in line with requirements in the Federal Data Strategy 2020 Action Plan. Data maturity is a metric that will be measured and reported as part of ED’s Annual Performance Plan. Several of these activities have been supported by ED’s investment in a Data Governance Board and Data Governance Infrastructure (DGBDGI) contract.

- The Education Stabilization Fund (ESF) Transparency Portal at covid-relief-data.ed.gov collects and reports survey data from grantees receiving funds for emergency relief from the COVID-19 pandemic and connects it with administrative data from usaspending.gov, College Scorecard, IPEDS, Common Core of Data, and ED’s G5 grants administration system. In February 2021, ED’s OCDO completed its first collection of annual performance reports (APRs) from state agencies and institutions of higher education that received CARES Act grants. OCDO created a data collection doorway in the portal to enable grantees to submit APRs on funding to institutions of higher education, State Education Agencies, and Governor’s Offices. Working in partnership with the Office of Postsecondary Education (OPE) and the Office of Elementary and Secondary Education (OESE), OCDO was able to achieve a 99.7% response rate from almost 4,900 higher education grantees and 100% response rate from State Education Agency grantees and Governor’s Offices grantees, ensuring comprehensive data on the use of funds to support student learning during the pandemic. An in-depth data quality review resulted in new technical assistance to grantees to improve reporting. The portal was updated in June 2021 to include APRs and data quality flags. OCDO and ED program offices continue to work with grantees to resolve data quality issues. ED also created internal dashboards customized to OPE, OESE, and policy leader needs for monitoring grant performance and outcomes.

- ED has also made concerted efforts to improve the availability and use of its data with the release of the revised College Scorecard that links data from NCES, the Office of Federal Student Aid, and the Internal Revenue Service. With recent updates in Q1 of FY21, the College Scorecard team improved the functionality of the tool to allow users to find, compare, and contrast different fields of study more easily, access expanded data on the typical earnings of graduates two years post-graduation, view median parent PLUS loan debt at specific institutions, and learn about the typical amount of federal loan debt for students who transfer. OCDO facilitated reconsideration of IRS risk assumptions to enhance data coverage and utility while still protecting privacy. The Scorecard enhancement discloses for prospective students how well borrowers from institutions are meeting their federal student loan repayment obligations, as well as how borrower cohorts are faring at certain intervals in the repayment process.

- IES continues to make available all data collected as part of its administrative data collections, sample surveys, and evaluation work. Its support of the Common Education Data Standards (CEDS) Initiative has helped to develop a common vocabulary, data model, and tool set for P-20 education data. The CEDS Open Source Community is active, providing a way for users to contribute to the standards development process.

5.4 Did the agency have policies and procedures to secure data and protect personal, confidential information? (Example: differential privacy; secure, multiparty computation; homomorphic encryption; or developing audit trails)

- IES is collaborating with an outside research team to conduct a proof of concept for secure multi-party computation (MPC). The Department’s general approach is to use the MPC framework to replicate an existing data collection that involves securely sharing PII across a number of partners.

- The Disclosure Review Board (DRB), the EDFacts Governing Board, the Student Privacy Policy Office (SPPO), and SPPO’s Privacy Technical Assistance Center and Privacy Safeguards Team all help to ensure the quality and privacy of education data. In FY19, the ED Data Strategy Team also published a user resource guide for staff on disclosure avoidance considerations throughout the data lifecycle.

- In FY20, the ED DRB approved 59 releases by issuing “Safe to Release” memos. The DRB is in the process of developing a revised Charter that outlines its authority, scope, membership, process for dispute resolution, and how it will work with other DRBs in ED. The DRB is also developing standard operating procedures outlining the types of releases that need to be reviewed along with the submission and review process for data releases. The DRB is currently planning to develop information sessions to build the capacity of ED staff focusing on such topics as disclosure avoidance techniques used at ED, techniques appropriate for administrative and survey data, and how to communicate with stakeholders about privacy and disclosure avoidance.

- In ED’s FY18-22 Performance Plan, Strategic Objective 3.2 is to “Improve privacy protections for, and transparency of, education data both at ED and in the education community.” The plan also outlines actions taken in FY18. ED’s Student Privacy website assists stakeholders in protecting student privacy by providing official guidance on FERPA, technical best practices, and the answers to Frequently Asked Questions.
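To make the multi-party computation approach mentioned earlier in this section more concrete, the following is a minimal sketch of one common building block, additive secret sharing, written in Python. It is an illustration only and is not the framework or protocol used in the IES proof of concept; the function names, the modulus, and the example counts are all hypothetical.

```python
import secrets

# Hypothetical modulus for this illustration; all arithmetic is done mod this value.
MODULUS = 2**61 - 1


def make_shares(value, n_parties):
    """Split an integer into n additive shares that sum to value mod MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    last = (value - sum(shares)) % MODULUS
    return shares + [last]


def secure_sum(private_values):
    """Compute the total of the parties' values using only shares."""
    n = len(private_values)
    # Each party splits its own value and hands one share to every party.
    all_shares = [make_shares(v, n) for v in private_values]
    # Party j adds up the j-th share it received from each party.
    partial_sums = [
        sum(all_shares[i][j] for i in range(n)) % MODULUS for j in range(n)
    ]
    # Only the partial sums are combined; no raw value is ever pooled.
    return sum(partial_sums) % MODULUS


# Made-up counts held privately by three hypothetical partners.
counts = [120, 45, 310]
print(secure_sum(counts))
```

Each individual share is a uniformly random number and reveals nothing about the value it came from; only the combined result is recovered. Production MPC systems add authentication, secure channels, and protections against dishonest parties that this sketch omits.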

5.5 Did the agency provide assistance to city, county, and/or state governments, and/or other grantees on accessing the agency’s datasets while protecting privacy?

- ED’s new Open Data Platform makes Department data easily accessible to the public. Data is machine-readable and searchable by keyword in order to promote easy access to relevant data assets. In addition, the ODP features an API so that aggregators and developers can leverage Department data to provide information and tools for families, policy makers, researchers, developers, advocates and other stakeholders. ODP will ultimately include listings of non-public, restricted data with links to information on the privacy-protective process for requesting restricted-use access to these data.

- ED’s Privacy Technical Assistance Center (PTAC) responds to technical assistance inquiries on student privacy issues and provides online FERPA training to state and school district officials. FSA conducted a postsecondary institution breach response assessment to determine the extent of a potential breach and provide the institutions with remediation actions around their protection of FSA data and best practices associated with cybersecurity.

- The Institute of Education Sciences (IES) administers a restricted-use data licensing program to make detailed data available to researchers when needed for in-depth analysis and modeling. NCES loans restricted-use data only to qualified organizations in the United States. Individual researchers must apply through an organization (e.g., a university, a research institution, or company). To qualify, an organization must provide a justification for access to the restricted-use data, submit the required legal documents, agree to keep the data safe from unauthorized disclosures at all times, and agree to participate fully in unannounced, unscheduled inspections of the researcher’s office to ensure compliance with the terms of the License and the Security Plan form.

- The National Center for Education Statistics (NCES) provides free online training on using its data tools to analyze data while protecting privacy. Distance Learning Dataset Training includes modules on NCES’s data-protective analysis tools, including QuickStats, PowerStats, and TrendStats. A full list of NCES data tools is available on its website.

10

Common Evidence Standards / What Works Designations

Did the agency use a common evidence framework, guidelines, or standards to inform its research and funding purposes; did that framework prioritize rigorous research and evaluation methods; and did the agency disseminate and promote the use of evidence-based interventions through a user-friendly tool in FY21?

6.1 Did the agency have a common evidence framework for research and evaluation purposes?

- ED has an agency-wide framework for impact evaluations that is based on ratings of studies’ internal validity. ED evidence-building activities are designed to meet the highest standards of internal validity (typically randomized controlled trials) when causality must be established for policy development or program evaluation purposes. When random assignment is not feasible, rigorous quasi-experiments are conducted. The framework was developed and is maintained by IES’s What Works Clearinghouse™ (WWC). WWC standards are maintained on the WWC website. A stylized representation of the standards can be found here, along with information about how ED reports findings from research and evaluations that meet these standards.

- Since 2002, ED, as part of its compliance with the Information Quality Act and OMB guidance, has required that all “research and evaluation information products documenting cause and effect relationships or evidence of effectiveness should meet the quality standards that will be developed as part of the What Works Clearinghouse” (see Information Quality Guidelines).

6.2 Did the agency have a common evidence framework for funding decisions?

- ED employs the same evidence standards in all discretionary grant competitions that use evidence to direct funds to applicants that are proposing to implement projects that have evidence of effectiveness and/or to build new evidence through evaluation. Those standards, as outlined in the Education Department General Administrative Regulations (EDGAR), build on ED’s What Works Clearinghouse™ (WWC) research design standards.

6.3 Did the agency have a user friendly tool that disseminated information on rigorously evaluated, evidence-based solutions (programs, interventions, practices, etc.) including information on what works where, for whom, and under what conditions?

- ED’s What Works Clearinghouse™ (WWC) identifies studies that provide valid and statistically significant evidence of effectiveness of a given practice, product, program, or policy (referred to as “interventions”), and disseminates summary information and reports on the WWC website.

- The WWC has published more than 600 Intervention Reports, which synthesize evidence from multiple studies about the efficacy of specific products, programs, and policies. Wherever possible, Intervention Reports also identify key characteristics of the analytic sample used in the study or studies on which the Reports are based.

- The WWC has published 27 Practice Guides, which synthesize across products, programs, and policies to surface generalizable practices that can transform classroom practice and improve student outcomes.

- Finally, the WWC has completed more than 11,000 single study reviews. Each is available in a searchable database.

6.4 Did the agency promote the utilization of evidence-based practices in the field to encourage implementation, replication, and application of evaluation findings and other evidence?

- ED has several technical assistance programs designed to promote the use of evidence-based practices, most notably IES’s Regional Educational Laboratory Program and the Office of Elementary and Secondary Education’s Comprehensive Center Program. Both programs use research on evidence-based practices generated by the What Works Clearinghouse and other ED-funded Research and Development Centers to inform their work. RELs also conduct applied research and offer research-focused training, coaching, and technical support on behalf of their state and local stakeholders. Their work is reflected in Strategic Plan Objectives 1.4 and 2.2.

- Often, those practices are highlighted in WWC Practice Guides, which are based on syntheses (most recently, meta-analyses) of existing research and augmented by the experience of practitioners. These guides are designed to address challenges in classrooms and schools.

- To ensure continuous improvement of the kind of TA work undertaken by the RELs and Comprehensive Centers, ED has invested in both independent evaluation and grant-funded research. The REL Program is currently undergoing evaluation, and design work for the next Comprehensive Center evaluation is underway. In addition, IES has awarded two grants to study and promote knowledge utilization in education: the Center for Research Use in Education and the National Center for Research in Policy and Practice. In June 2020, IES released a report on How States and Districts Support Evidence Use in School Improvement, which may be of value to technical assistance providers and SEA and LEA staff in improving the adoption and implementation of evidence-based practice.

- Finally, ED developed revised evidence definitions and related selection criteria for competitive programs that align with ESSA to streamline and clarify provisions for grantees. These revised definitions align with ED’s suggested criteria for states’ implementation of ESSA’s four evidence levels, included in ED’s non-regulatory guidance, Using Evidence to Strengthen Education Investments. ED also developed a fact sheet to support internal and external stakeholders in understanding the revised evidence definitions. This document has been shared with internal and external stakeholders through multiple methods, including the Office of Elementary and Secondary Education ESSA technical assistance page for grantees.

6

Innovation

Did the agency have staff, policies, and processes in place that encouraged innovation to improve the impact of its programs in FY21?

7.1 Did the agency have staff dedicated to leading its innovation efforts to improve the impact of its programs?

- In FY19, the Office of Elementary and Secondary Education (OESE) made strategic investments in innovative educational programs and practices and administered discretionary grant programs. In FY19, the Innovation and Improvement account received $1.035 billion. ED reorganized in 2019, consolidating the Office of Innovation and Improvement into the Office of Elementary and Secondary Education. To lead and support innovation within the reorganized OESE, ED created the Evidence-Based Policy (EBP) team. The EBP team works within OESE and with colleagues across the agency to develop and expand efforts to inform policy and improve program practices.

- The Innovation and Engagement Team in the Office of the Chief Data Officer promotes data integration and sharing, making data accessible, understandable, and reusable; engages the public and private sectors on how to improve access to the Department’s data assets; develops solutions that provide tiered access based on public need and privacy protocols; develops consumer information portals and products that meet the needs of external consumers; and partners with OCIO and ED data stewards to identify and evaluate new technology solutions for improving collection, access, and use of data. This team led the ED work on the ESF transparency portal, highlighted above, and also manages and updates College Scorecard. The team is currently developing ED’s first Open Data Plan and a playbook for data quality at ED.

7.2 Did the agency have policies, processes, structures, or programs to promote innovation to improve the impact of its programs?

- A team of analysts within the Evidence-Based Practices unit (EBP) in OESE works to support the use of evidence and data in formula and discretionary grantmaking. This unit promotes evidence consistent with relevant provisions of the Elementary and Secondary Education Act of 1965 (ESEA), as amended by the Every Student Succeeds Act (ESSA). EBP supports offices as they seek ways to incorporate evidence and data routinely and meaningfully into their grantmaking agenda and to operationalize the ESSA evidence framework for strengthening the effectiveness of ESEA investments within OESE programs. The unit builds internal capacity in two ways: by convening staff to review and discuss the most recent and relevant resources on the use of evidence, both internally and by ED grantees; and by responding to individual office requests for resources and information on generating and using evidence within their programs. Offices in OESE also invite members of EBP to share and discuss available resources with their grantees at various grantee convenings.

- The Education Innovation and Research (EIR) program is ED’s primary innovation program for K-12 public education. EIR grants are focused on validating and scaling evidence-based practices and encouraging innovative approaches to persistent challenges. The EIR program incorporates a tiered-evidence framework that supports larger awards for projects with the strongest evidence base as well as promising earlier-stage projects that are willing to undergo rigorous evaluation. Lessons learned from the EIR program have been shared across the agency and have informed policy approaches in other programs.

7.3 Did the agency evaluate its innovation efforts, including using rigorous methods?

- ED’s Experimental Sites Initiative is entirely focused on assessing the effects of statutory and regulatory flexibility in one of its most critical programs: Title IV Federal Student Aid programs. FSA collects performance and other data from all participating institutions, while IES conducts rigorous evaluations, including randomized trials, of selected experiments. Recent examples include ongoing work on Federal Work Study and Loan Counseling, as well as recently published studies on short-term Pell grants.

- The Education Innovation and Research (EIR) program, ED’s primary innovation program for K-12 public education, incorporates a tiered-evidence framework that supports larger awards for projects with the strongest evidence base as well as promising earlier-stage projects that are willing to undergo rigorous evaluation.

15

Use of Evidence in Competitive Grant Programs

Did the agency use evidence of effectiveness when allocating funds from its competitive grant programs in FY21?

8.1 What were the agency’s five largest competitive programs and their appropriations amount (and were city, county, and/or state governments eligible to receive funds from these programs)?

- In FY21, the five largest competitive grant programs are:

- TRIO ($1.097 billion; eligible grantees: institutions of higher education, public and private organizations);

- Charter Schools Program ($440 million; eligible grantees: varies by program, including state entities, charter management organizations, public and private entities, and local charter schools);

- GEAR UP ($368 million; eligible grantees: state agencies; partnerships that include IHEs and LEAs);

- Teacher and School Leader Incentive Program (TSL) ($200 million; eligible grantees: local education agencies; partnerships between state and local education agencies; and partnerships between nonprofit organizations and local educational agencies);

- Comprehensive Literacy Development Grants ($192 million; eligible grantees: state education agencies).

8.2 Did the agency use evidence of effectiveness to allocate funds in the five largest competitive grant programs? (e.g., Were evidence-based interventions/practices required or suggested? Was evidence a significant requirement?)

- ED uses evidence of effectiveness when making awards in its largest competitive grant programs.

- The vast majority of TRIO funding in FY21 was used to support continuation awards to grantees that were successful in prior competitions that awarded competitive preference priority points for projects that proposed strategies supported by: moderate evidence of effectiveness (Upward Bound and Upward Bound Math and Science); or evidence that demonstrates a rationale (Student Support Services). Additionally, ED will make new awards under the Talent Search and Educational Opportunity Centers programs. These competitions provide points for applicants that propose a project with a key component in its logic model that is informed by research or evaluation findings that suggest it is likely to improve relevant outcomes.

- Under the Charter Schools Program (CSP), ED generally requires or encourages applicants to support their projects through logic models; however, applicants are not expected to develop their applications based on rigorous evidence. Within CSP, the Grants to Charter School Management Organizations for the Replication and Expansion of High-Quality Charter Schools (CMO Grants) supports charter schools with a previous track record of success.

- For the 2021 competition for GEAR UP State awards, ED used a competitive preference priority for projects implementing activities that are supported by moderate evidence of effectiveness. For the 2021 competition for GEAR UP Partnership awards, ED used a competitive preference priority for projects implementing activities that are supported by promising evidence.

- The TSL statute requires applicants to provide a description of the rationale for their project and describe how the proposed activities are evidence-based, and grantees are held to these standards in the implementation of the program.

- The Comprehensive Literacy Development (CLD) statute requires that grantees provide subgrants to local educational agencies that conduct evidence-based literacy interventions. ESSA requires ED to give priority to applicants that meet the higher evidence levels of strong or moderate evidence, and in cases where there may not be significant evidence-based literacy strategies or interventions available, for example in early childhood education, encourage applicants to demonstrate a rationale.

8.3 Did the agency use its five largest competitive grant programs to build evidence? (e.g., requiring grantees to participate in evaluations)

- The Evidence Leadership Group (ELG) advises program offices on ways to incorporate evidence in grant programs through encouraging or requiring applicants to propose projects that are based on research and by encouraging applicants to design evaluations for their proposed projects that would build new evidence.

- ED’s grant programs require some form of an evaluation report on a yearly basis to build evidence, demonstrate performance improvement, and account for the utilization of funds. For examples, please see the annual performance reports of TRIO, the Charter Schools Program, and GEAR UP. The Teacher and School Leader Incentive Program is required by ESSA to conduct a national evaluation. The Comprehensive Literacy Development Grant requires evaluation reports. In addition, IES is currently conducting rigorous evaluations to identify successful practices in TRIO-Educational Opportunity Centers and GEAR UP. In FY19, IES released a rigorous evaluation of practices embedded within TRIO-Upward Bound that examined the impact of enhanced college advising practices on students’ pathway to college.

8.4 Did the agency use evidence of effectiveness to allocate funds in any other competitive grant programs (besides its five largest grant programs)?

- The Education Innovation and Research (EIR) program supports the creation, development, implementation, replication, and taking to scale of entrepreneurial, evidence-based, field-initiated innovations designed to improve student achievement and attainment for high-need students. The program uses three evidence tiers to allocate funds based on evidence of effectiveness, with larger awards given to applicants who can demonstrate stronger levels of prior evidence and produce stronger evidence of effectiveness through a rigorous, independent evaluation. The FY21 competition included checklists and PowerPoints to help applicants clearly understand the evidence requirements.

- ED incorporates the evidence standards established in EDGAR as priorities and selection criteria in many competitive grant programs.

8.5 What are the agency’s 1-2 strongest examples of how competitive grant recipients achieved better outcomes and/or built knowledge of what works or what does not?

- The EIR program supports the creation, development, implementation, replication, and scaling up of evidence-based, field-initiated innovations designed to improve student achievement and attainment for high-need students. IES released The Investing in Innovation Fund: Summary of 67 Evaluations, which can be used to inform efforts to move to more effective practices. ED is exploring the results to determine what lessons learned can be applied to other programs.

8.6 Did the agency provide guidance which makes clear that city, county, and state government, and/or other grantees can or should use the funds they receive from these programs to conduct program evaluations and/or to strengthen their evaluation capacity-building efforts?

- In 2016, ED released non-regulatory guidance to provide state educational agencies, local educational agencies (LEAs), schools, educators, and partner organizations with information to assist them in selecting and using “evidence-based” activities, strategies, and interventions, as defined by ESSA, including carrying out evaluations to “examine and reflect” on how interventions are working. However, the guidance does not specify that federal competitive funds can be used to conduct such evaluations. Frequently, though, programs do include a requirement to evaluate the grant during and after the project period.

7

Use of Evidence in Non-Competitive Grant Programs

Did the agency use evidence of effectiveness when allocating funds from its non-competitive grant programs in FY21?

9.1 What were the agency’s five largest non-competitive programs and their appropriation amounts (and were city, county, and/or state governments eligible to receive funds from these programs)?

- In FY21, the five largest non-competitive grant programs are:

- Title I Grants to LEAs ($16.5 billion; eligible grantees: state education agencies);

- IDEA Grants to States ($12.9 billion; eligible grantees: state education agencies);

- Supporting Effective Instruction State Grants ($2.1 billion; eligible grantees: state education agencies);

- Impact Aid Payments to Federally Connected Children ($1.34 billion; eligible grantees: local education agencies);

- 21st Century Community Learning Centers ($1.3 billion; eligible grantees: state education agencies).

9.2 Did the agency use evidence of effectiveness to allocate funds in the five largest non-competitive grant programs? (e.g., Are evidence-based interventions/practices required or suggested? Is evidence a significant requirement?)

- ED worked with Congress in FY16 to ensure that evidence played a major role in ED’s large non-competitive grant programs in the reauthorized ESEA. As a result, section 1003 of ESSA requires states to set aside at least 7% of their Title I, Part A funds for a range of activities to help school districts improve low-performing schools. School districts and individual schools are required to create action plans that include “evidence-based” interventions that demonstrate strong, moderate, or promising levels of evidence.

9.3 Did the agency use its five largest non-competitive grant programs to build evidence? (e.g., requiring grantees to participate in evaluations)

- ESEA requires a National Assessment of Title I (Improving the Academic Achievement of the Disadvantaged). In addition, Title I Grants require state education agencies to report on school performance, including those schools identified for comprehensive or targeted support and improvement.

- Federal law (ESEA) requires states receiving funds from 21st Century Community Learning Centers to “evaluate the effectiveness of programs and activities” that are carried out with federal funds (section 4203(a)(14)), and it requires local recipients of those funds to conduct periodic evaluations in conjunction with the state evaluation (section 4205(b)).

- The Office of Special Education Programs (OSEP), the implementing office for IDEA grants to states, has revised its accountability system to shift the balance from a system focused primarily on compliance to one that puts more emphasis on results through the use of Results Driven Accountability.

9.4 Did the agency use evidence of effectiveness to allocate funds in any other non-competitive grant programs (besides its five largest grant programs)?

- Section 4108 of ESEA authorizes school districts to invest “safe and healthy students” funds in Pay for Success initiatives. Section 1424 of ESEA authorizes school districts to invest their Title I, Part D funds (Prevention and Intervention Programs for Children and Youth Who are Neglected, Delinquent, or At-Risk) in Pay for Success initiatives; under section 1415 of the same program, a State agency may use funds for Pay for Success initiatives.

9.5 What are the agency’s strongest examples of how non-competitive grant recipients achieved better outcomes and/or built knowledge of what works or what does not?

- States and school districts are implementing the requirements in Title I of the ESEA regarding using evidence-based interventions in school improvement plans. Some States are providing training or practice guides to help schools and districts identify evidence-based practices.

9.6 Did the agency provide guidance which makes clear that city, county, and state government, and/or other grantees can or should use the funds they receive from these programs to conduct program evaluations and/or to strengthen their evaluation capacity-building efforts?

- In 2016, ED released non-regulatory guidance to provide state educational agencies, local educational agencies (LEAs), schools, educators, and partner organizations with information to assist them in selecting and using “evidence-based” activities, strategies, and interventions, as defined by ESSA, including carrying out evaluations to “examine and reflect” on how interventions are working. However, the guidance does not specify that federal non-competitive funds can be used to conduct such evaluations.

5

Repurpose for Results

In FY21, did the agency shift funds away from or within any practice, policy, intervention, or program that consistently failed to achieve desired outcomes?

10.1 Did the agency have policy(ies) for determining when to shift funds away from grantees, practices, policies, interventions, and/or programs that consistently failed to achieve desired outcomes, and did the agency act on that policy?

- The Department works with grantees to support implementation of their projects to achieve intended outcomes. The Education Department General Administrative Regulations (EDGAR) explains that ED considers whether grantees make “substantial progress” when deciding whether to continue grant awards. In deciding whether a grantee has made substantial progress, ED considers information about grantee performance. If a continuation award is reduced, more funding may be made available for other applicants, grantees, or activities.

10.2 Did the agency identify and provide support to agency programs or grantees that failed to achieve desired outcomes?

- The Department provides a variety of technical assistance to help grantees improve outcomes. Department staff work with grantees to assess their progress and, when needed, provide technical assistance to support program improvement. On a national scale, the Comprehensive Centers program, Regional Educational Laboratories, and technical assistance centers managed by the Office of Special Education Programs develop resources and provide technical assistance. The Department uses a tiered approach in these efforts: universal technical assistance delivered through a general dissemination strategy; targeted technical assistance that addresses common needs and issues among a number of grantees; and intensive technical assistance that focuses on specific issues faced by specific recipients. The Department also supports program-specific technical assistance for a variety of individual grant programs.